The AI Engineer's Roadmap for 2026

It's been a while. I'm back with a clear plan for overwhelmed engineers. And the most important thing I've ever built for you.

If you’re an experienced software engineer looking at the AI landscape today, you’re probably feeling overwhelmed. It’s chaos. Every day brings a new model, a new framework, a dozen new tools, and a thousand conflicting opinions on social media. The pressure to “upskill or get left behind” is immense, but the path forward is buried in noise.

It all boils down to one, paralysing question: “Amid all this, where do I even begin?”

That question has been on my mind a lot. And I know, it’s been a while since we last spoke.

The truth is, giving you a real answer to that question required more than just another newsletter. As a solo founder managing this project, I realised that to provide a true, structured path out of the chaos, I couldn’t just write about it. I had to go away and actually build it.

That’s what I’ve been doing these past few months, pouring all my energy into building something bigger, something I believe is the most valuable answer I can give you.

So today, I’m back. And I promise this issue will more than make up for the silence.

It starts with a clear plan.

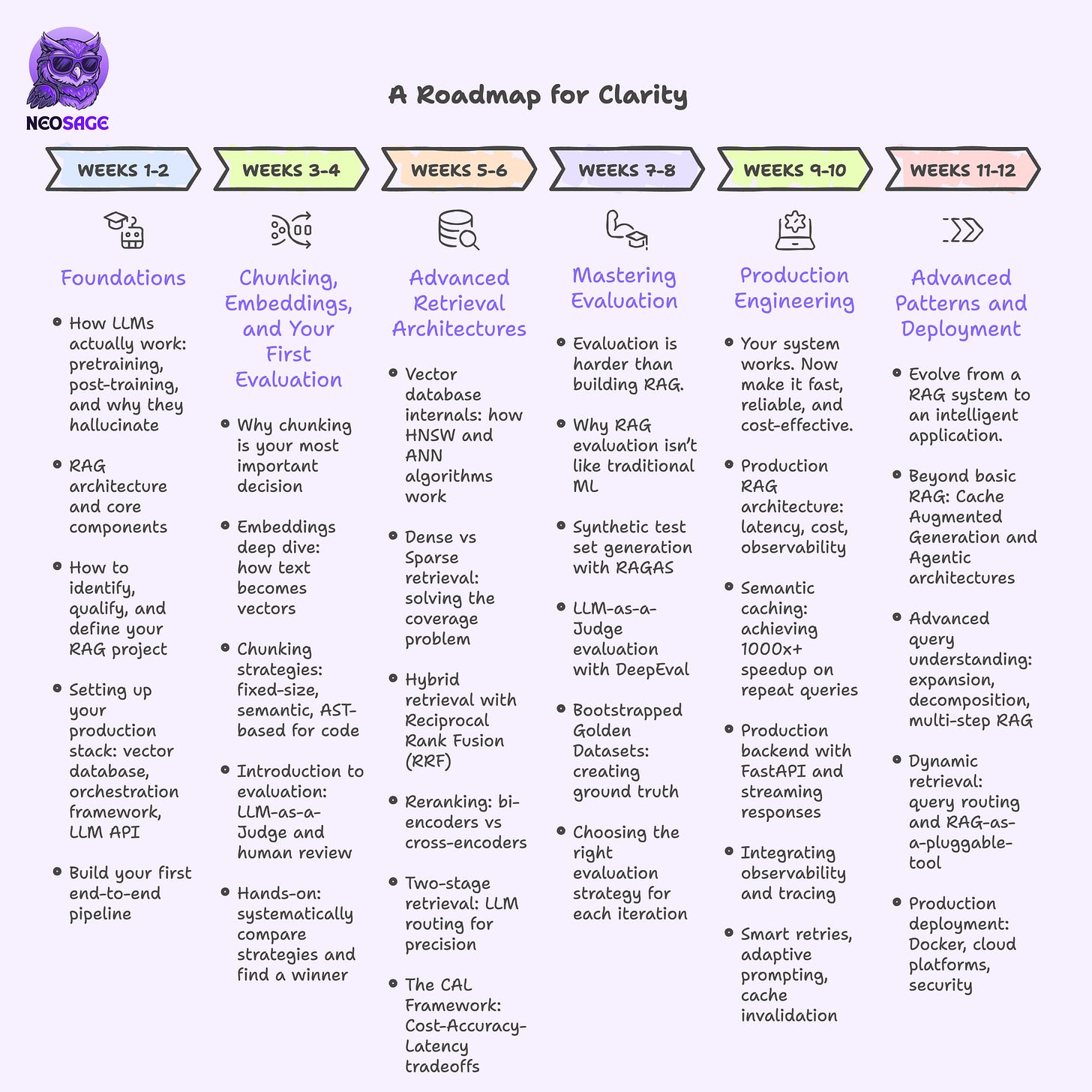

A Roadmap for Clarity

The feeling of being overwhelmed is a symptom of not having a map. This is that map.

This is the no-hype, no-shortcuts, 12-week plan I would give to any experienced software engineer who wants to stop chasing trends and start building real AI systems.

WEEKS 1-2: Foundations

How LLMs actually work: pretraining, post-training, and why they hallucinate

RAG architecture and core components

How to identify, qualify, and define your RAG project

Setting up your production stack: vector database, orchestration framework, LLM API

Build your first end-to-end pipeline

You’re not optimizing yet. You’re building intuition.

WEEKS 3-4: Chunking, Embeddings, and Your First Evaluation

Why chunking is your most important decision

Embeddings deep dive: how text becomes vectors

Chunking strategies: fixed-size, semantic, AST-based for code

Introduction to evaluation: LLM-as-a-Judge and human review

Hands-on: systematically compare strategies and find a winner

WEEKS 5-6: Advanced Retrieval Architectures

Vector database internals: how HNSW and ANN algorithms work

Dense vs Sparse retrieval: solving the coverage problem

Hybrid retrieval with Reciprocal Rank Fusion (RRF)

Reranking: bi-encoders vs cross-encoders

Two-stage retrieval: LLM routing for precision

The CAL Framework: Cost-Accuracy-Latency tradeoffs

WEEKS 7-8: Mastering Evaluation

Evaluation is harder than building RAG.

Why RAG evaluation isn’t like traditional ML

Synthetic test set generation with RAGAS

LLM-as-a-Judge evaluation with DeepEval

Bootstrapped Golden Datasets: creating ground truth

Choosing the right evaluation strategy for each iteration

No evaluation = shipping blind.

WEEKS 9-10: Production Engineering

Your system works. Now make it fast, reliable, and cost-effective.

Production RAG architecture: latency, cost, observability

Semantic caching: achieving 1000x+ speedup on repeat queries

Production backend with FastAPI and streaming responses

Integrating observability and tracing

Smart retries, adaptive prompting, cache invalidation

WEEKS 11-12: Advanced Patterns and Deployment

Evolve from a RAG system to an intelligent application.

Beyond basic RAG: Cache Augmented Generation and Agentic architectures

Advanced query understanding: expansion, decomposition, multi-step RAG

Dynamic retrieval: query routing and RAG-as-a-pluggable-tool

Production deployment: Docker, cloud platforms, security

The Starting Point: Why We Start with RAG

That’s the 12-week roadmap. It’s comprehensive, and looking at it, you might still feel like there’s a lot to learn. Still a lot, right?

The key isn’t to start everywhere at once. It’s to find the single point of maximum leverage: the one skill that unlocks the rest.

For any modern AI stack, that point is Retrieval-Augmented Generation.

This might seem counterintuitive. Isn’t RAG just for chatbots? Isn’t it just one small piece of that big roadmap?

No. That’s the great misunderstanding. RAG is not just a feature for Q&A; it’s the fundamental design pattern that makes almost every other advanced AI system function.

Let me show you. Once you see the pattern, you can’t unsee it:

Agentic Tool Use: How does an AI agent decide which of hundreds of tools to use? It performs retrieval. The user’s query is embedded and used to search a vector database of all available tool descriptions. The top matching tools and their API schemas are then retrieved and provided as context in the prompt, giving the LLM the exact information it needs to make a correct function call.

Long-Term Memory: When an assistant seems to “remember” you, it’s because your past conversations have been chunked, embedded, and stored in a vector database. When you speak, the system isn’t just looking at your last few messages; it performs a semantic search to retrieve the most relevant prior exchanges from weeks ago, giving the LLM a rich, long-term context.

Structured Data Access: A “Text-to-SQL” copilot doesn’t understand your entire database schema; it would drown in thousands of tables. Instead, it uses the user’s question to retrieve only the most relevant table schemas, column descriptions, foreign key relationships, and even sample rows. This curated “micro-schema” is then injected into the prompt, giving the LLM the exact context it needs to write an accurate query.

Adaptive Prompting: Even sophisticated prompting is often RAG in disguise. Instead of a static, hard-coded few-shot prompt, the system maintains a large library of high-quality examples. At runtime, it retrieves the examples that are most semantically similar to the user’s query to dynamically assemble the perfect prompt for the task at hand.

Retrieval is the mechanism we use to connect a static, pre-trained model to a dynamic, external world.

This is why we start with RAG. Mastering it doesn’t just teach you how to build a Q&A bot. It teaches you the core system design for applied AI. It is the highest-return investment of your time.

The First Great Challenge: “The Production Gap”

So, you’re convinced. RAG is the highest-leverage skill, the engine that powers modern AI. You decide to start there. You pick up a tutorial, copy the code, get a demo working with a sample PDF, and it feels like magic.

Then you point it at your own, real-world, messy data, and the magic vanishes. Your system breaks.

This is The Production Gap: the vast chasm between a tidy tutorial and a messy, production reality. The reason for this gap is that tutorials present RAG as a simple pipeline. Production RAG is not a pipeline; it’s a system of interacting layers, each with its own complex decisions.

Think of it like any other production system you’ve built. There’s a stack, and every layer matters:

1. The Data Layer: This is your foundation, and its quality dictates the performance of every other layer downstream.

Chunking: How do you break up your documents? If your chunk size is too small, the full context for an answer might be split across multiple, disconnected chunks. If it’s too large, you introduce too much noise for the retriever. Getting this wrong means the correct context is fundamentally impossible to retrieve in a single step.

Embeddings: Which model do you use? Each one creates a completely different “meaning space.” Changing your embedding model later isn’t a simple swap; it requires re-indexing your entire knowledge base, creating significant architectural lock-in.

2. The Retrieval Layer: This is the algorithmic core that surfaces information. The first lesson here is that semantic similarity doesn’t always mean relevance.

Retrieval Strategy: Do you use dense search for meaning, sparse search for keywords, or a hybrid approach to get the best of both?

Metadata Filtering: Do you enrich your vectors with metadata (like dates, sources, or customer IDs)? This allows you to apply hard filters before the semantic search, making retrieval more reliable and deterministic: for example, ensuring you only retrieve documents from ‘Q4 2025’ or for ‘Customer X’.

Scoring & Reranking: How do you score the results? Do you rely purely on vector similarity, or create custom scoring profiles that boost results based on recency or business rules? Do you add a second-stage reranker to improve precision?

3. The Orchestration & Generation Layer: This is the brain of your system, coordinating the other layers.

Query Handling: Do you use the user’s query as-is, or does your orchestration decompose a complex question into multiple sub-queries?

Prompt Engineering: How do you structure the prompt to force the LLM to actually use the provided context, especially when it’s noisy or contradictory? Which LLM do you use?

Interaction Pattern: Is it a single-shot process, or a multi-step one where you retrieve, generate, and then retrieve again?

4. The Evaluation Layer: This is the most critical and most often missing layer, the system’s feedback loop.

It answers the core question: How do you know if a change to your chunking strategy made things better or worse? Without a robust evaluation layer, you are flying blind. It’s what separates professional systems from amateur demos and involves building test sets, using LLM-as-a-Judge, and running regression tests to prevent silent failures.

Each of these isn’t just a one-time choice; it’s a decision with deep, coupled implications. This is the engineering rigour required. It’s not about finding one magic combination; it’s about understanding and navigating the tradeoffs at every layer of the stack. This is the hard part of AI that no one talks about.

Hoo, Sagers.

Your Owlthor’s been a bit... restless to face you after an unscheduled sabbatical. She told me to tell you she’s not thrilled about the break either, but don’t tell her I said this: it’s not her fault. She’s been nose-deep in something far bigger, something much more valuable for all of you builders. That’s why she couldn’t show up. Today, though (and she doesn’t know I’m saying this part yet), she will be sharing what she’s been working on.

Be nice, Sagers. The Owlthor is a solo founder, and frankly, she needs the coffee.

— Nocto

The Part Everyone Skips: Evaluation

Now, what’s the single most overlooked piece of that complex RAG system? The part that determines whether you’re building a reliable product or a demo that just feels right?

It’s evaluation.

The single hardest part of building production-ready RAG is evaluation. And it’s also the part that almost every course and tutorial ignores.

The tutorials assume you have a nice, clean, labelled dataset to test against. A perfect ground truth. But in the real world, you’re starting with a messy pile of documents and a stream of user questions. You have no ground truth. No labels. No test set.

So you have to build it yourself.

This is why true RAG mastery means constructing your own evaluation frameworks from scratch, using powerful techniques like:

Synthetic test sets: Using LLMs to generate realistic question-answer pairs directly from your documents. It’s fast, but you need to understand its blind spots.

LLM-as-a-Judge: Employing a powerful model to objectively score your system’s outputs. It’s incredibly useful, but you need to be aware of its biases.

Bootstrapped “golden datasets”: Starting with a small set of manually curated, perfect examples and then strategically expanding it to create a reliable, evolving ground truth for your specific domain. This is slow, but essential.

The maxim is simple: If you can’t measure it, you can’t improve it. In RAG, building the measurement system is half the work. Without it, you are shipping blind.

The Solution: Depth Over Breadth

So, how do you conquer that roadmap and cross the Production Gap without quitting your job and spending years on trial and error?

You’ve seen the chaos of the AI landscape. You’ve seen the layers of complexity involved in building a single, production-grade RAG system.

The answer isn’t to learn a little bit about 100 different AI tools. That’s just more noise, more overwhelm.

The answer is to master one, fundamental system so deeply that you gain the confidence and intuition to tackle any problem. The answer is depth over breadth.

This philosophy is why I paused the newsletter. I poured all my energy into building the one thing I believe is the ultimate structured path for an experienced engineer to break into AI: The Engineer’s RAG Accelerator.

This is a hands-on, 6-week accelerator designed to take you, an experienced software engineer, from feeling overwhelmed to building your first production-grade AI system with confidence.

It was built from the ground up to solve your biggest challenges:

“How can I master AI without quitting my day job?” The program is self-paced and designed for a 6-8 hour/week commitment, so you can master this new skill while effectively balancing your day job.

“What if I’m new to AI?” This cohort starts with core LLM fundamentals and RAG architecture, building your intuition from the ground up. If you have solid software engineering experience, you have all the prerequisites.

“I get stuck following tutorials by myself.” You won’t be alone. You get weekly 1-hour live Q&A sessions with me and daily support in our private cohort chat to get unstuck fast and learn from your peers.

“How do I build something that actually ships?” Most tutorials stop at ‘hello world’. We equip you with an industry-grade tech stack (Haystack, Qdrant, Gemini, Redis, FastAPI, Streamlit, Opik) and production-ready code templates so you can build and deploy applications that work.

“How do I know if my system is actually working?” We go deep on the part everyone else skips: evaluation. You will learn to build your own evaluation systems from scratch using RAGAS, DeepEval, and bootstrapped golden datasets.

“I need a real project for my portfolio.” You won’t just learn; you will build and deploy your own unique capstone RAG system, giving you a real-world project to showcase your new expertise.

“Will this be outdated in a year?” You get lifetime access to all course materials, code, and all future updates, ensuring this investment continues to pay off as the industry evolves.

I launched this to a small waitlist, and 65% of the 50 seats were taken in under 48 hours.

There are a few seats left, filling fast

I’m opening them now to you, my newsletter subscribers, first.

If you are an experienced software engineer who is tired of the hype and ready for a structured, hands-on path to building real, production-grade AI systems, this is for you.

You can learn more and claim one of the remaining spots here:

It feels good to be back.

Got questions? Hit me up!

Hope to see you inside.