The Illusion of Illusion... of AI?

A Builder's front-row seat to the AI Reasoning Wars, and what the Apple vs. Anthropic debate really means for those of us who build.

In the middle of the generative AI arms race, where every week someone drops a new model with more neurons, more tokens, more “look what it can do!”, Apple stepped in.

Not with a model.

Not with an API.

But with a research paper.

And not just any paper, one with the wonderfully spicy title: “The Illusion of Thinking.”

Catchy. Slightly theatrical. And guaranteed to set AI Twitter on fire.

The paper doesn’t tiptoe around its point. It goes straight for the throat, claiming:

That today’s most advanced language models, yep, the ones acing benchmark leaderboards, aren’t actually reasoning. They’re just next-level pattern matchers pulling off a convincing magic trick.

That these models hit a hard ceiling. As soon as a puzzle crosses a certain complexity threshold, accuracy doesn’t just drop, it collapses. Like, falls-off-a-cliff collapses.

And most intriguingly, that these models, when faced with problems they could technically solve, just... give up. They don’t even try. They reduce their “thinking effort” and tap out early, despite having the token budget left to finish the job.

Needless to say, the media went bananas.

(Bananas for Apple. C’mon, you walked right into that one.)

Here’s how the headlines read:

“Apple Researchers Just Released a Damning Paper That…”

“Advanced AI suffers ‘complete accuracy collapse’”

“Apple says generative AI cannot think like a human”

That last one? XD.

I mean, if you thought your LLM could “think like a human,” that’s on you, my friend.

But let’s be real: headlines are for clicks, not clarity.

So let’s skip the spin, pour yourself a coffee (or something stronger, Nocto’s not judging), and dig into what the paper actually shows. Then we’ll see whether the claims hold up under real engineering scrutiny, or if this is just another case of academic dramatics.

Part 1: Apple's Case – Are LRMs Just Simulating Intelligence?

So, how exactly did Apple arrive at these claims?

To their credit, this wasn’t just another leaderboard stunt. The team behind the paper set out to rigorously test the reasoning capabilities of Large Language Models (LLMs) and Large Reasoning Models (LRMs) using a controlled, deliberately designed setup that avoids common benchmarking pitfalls. Here's a breakdown of how they approached the problem and what they found.

The Engineer’s Read: The Basis of the Claims

At the heart of the paper is a critique of existing reasoning benchmarks and a proposed alternative for evaluating reasoning in a more isolated, measurable way.

The Problem with Benchmarks

The authors argue that most benchmarks used to test reasoning (like math word problems or competitive coding datasets) are flawed due to data contamination; in other words, models may have seen similar examples during pretraining, making it unclear whether they are reasoning or simply recalling.

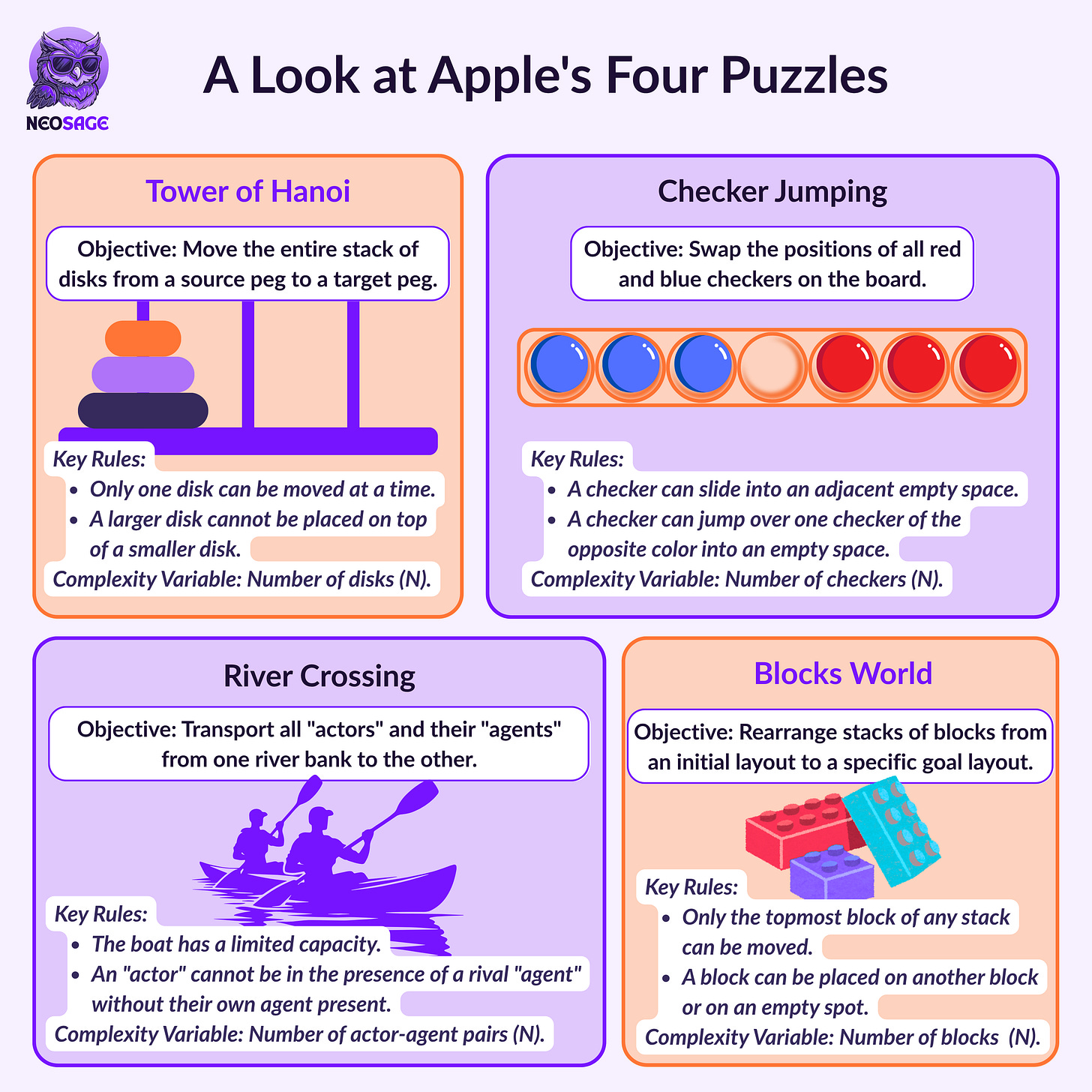

To remove this ambiguity, the authors designed a new testbed using four classic puzzles:

Tower of Hanoi, Checker Jumping, River Crossing, Blocks World

These were chosen for two reasons:

Controllability: The complexity of each puzzle can be precisely scaled using variables like the number of disks, agents, or steps.

Verifiability: Every move made by a model can be validated against a ground-truth simulator, allowing for exact evaluation, not just of the final answer, but the reasoning trace itself.

This setup allowed them to focus on whether models could solve a new, clean problem through reasoning, not retrieval.
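To make "validated against a ground-truth simulator" concrete, here is a minimal sketch of what such a checker could look like for Tower of Hanoi. It is my own illustration, not the paper's code: the names `replay` and `check_solution`, the 0-2 peg numbering, and the `(source, target)` move format are all assumptions.

```python
from typing import List, Optional, Tuple

Move = Tuple[int, int]  # (source peg, target peg), pegs numbered 0..2 (assumed format)


def replay(num_disks: int, moves: List[Move]) -> Tuple[List[List[int]], Optional[int]]:
    """Replay moves on a fresh board; return the pegs and the index of the first illegal move."""
    # Peg 0 starts with every disk, largest (num_disks) at the bottom.
    pegs = [list(range(num_disks, 0, -1)), [], []]
    for index, (src, dst) in enumerate(moves):
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            return pegs, index  # unknown peg or a move that goes nowhere
        if not pegs[src]:
            return pegs, index  # nothing to pick up
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            return pegs, index  # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs, None


def check_solution(num_disks: int, moves: List[Move]) -> Tuple[bool, Optional[int]]:
    """Return (solved, first_illegal_index). Solved means all legal and every disk on peg 2."""
    pegs, first_bad = replay(num_disks, moves)
    solved = first_bad is None and len(pegs[2]) == num_disks
    return solved, first_bad


print(check_solution(2, [(0, 1), (0, 2), (1, 2)]))  # a correct 2-disk solution
print(check_solution(2, [(0, 1), (0, 1)]))          # second move stacks big on small
```

The useful part is the second return value: you don't just learn that a trace failed, you learn at which step it went wrong.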

Key Finding #1: The Three Regimes of Complexity

The study compared reasoning-enhanced models (e.g. Claude 3.7 Sonnet Thinking, DeepSeek-R1) with their standard counterparts that lack explicit reasoning traces.

They found that performance could be broken down into three distinct regimes, based on task difficulty:

Low-Complexity Regime (e.g., Tower of Hanoi with N < 5):

Simpler tasks were solved more efficiently and accurately by standard models. The reasoning overhead in LRMs provided no benefit and sometimes made performance worse.

Medium-Complexity Regime (e.g., Tower of Hanoi with N = 5–7):

This is where the LRMs showed their strength. Their structured reasoning traces helped them outperform simpler models.

High-Complexity Regime (e.g., Tower of Hanoi with N ≥ 8):

Across both model types, accuracy dropped sharply, often to zero. Even models designed for reasoning were unable to handle the increased compositional difficulty.

This was described in the paper as a “complete performance collapse”, suggesting that beyond a certain point, current models cannot generalise effectively in these domains.

Key Finding #2: The Scaling Limit and “Giving Up” Behaviour

This finding reveals a surprising pattern in how models allocate effort.

Initially, as tasks became harder, models increased their reasoning trace length, a sign that they were engaging in more step-by-step processing.

But once complexity entered the high regime, something changed. Despite having enough token budget left to continue, the models started producing shorter reasoning traces, effectively reducing their own effort.

This suggests a fundamental limitation in how models internally assess and respond to increasing complexity, not just a resource issue, but potentially an architectural one. The model seems to “decide” it’s not worth trying.

Key Finding #3: Analysis of Reasoning Traces and Inconsistencies

Looking deeper into model behaviour, the authors observed:

Overthinking on Easy Problems:

In simple puzzles, models often found a valid solution early in their trace but continued generating unnecessary or incorrect steps, indicating inefficient use of reasoning capacity.

No Clear Correlation Between Solution Length and Performance:

For example, models were able to execute 100+ sequential moves correctly in Tower of Hanoi but struggled with 5-step River Crossing puzzles.

Key Finding #4: The Failure of Algorithm Execution

Perhaps the most important finding, especially for builders, is what happened when models were explicitly provided with the correct solution logic.

In this experiment, researchers gave the models the exact recursive algorithm for solving the Tower of Hanoi puzzle, directly embedded in the prompt.

The result? No improvement.

Models still failed at the same complexity threshold.

This indicated that the failure isn’t just about the ability to devise an algorithm; it’s about the ability to execute a logically structured plan over multiple steps.
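For reference, the textbook recursive solution is tiny. The sketch below is the standard recursion written in Python; it is not a copy of the prompt Apple used, and the function name and peg numbering are my own choices.

```python
from typing import Iterator, Tuple


def hanoi_moves(n: int, source: int = 0, target: int = 2, spare: int = 1) -> Iterator[Tuple[int, int]]:
    """Yield (source, target) moves that transfer n disks from source to target."""
    if n == 0:
        return
    # 1. Park the n-1 smaller disks on the spare peg.
    yield from hanoi_moves(n - 1, source, spare, target)
    # 2. Move the largest disk straight to the target.
    yield (source, target)
    # 3. Bring the n-1 smaller disks from the spare peg onto the target.
    yield from hanoi_moves(n - 1, spare, target, source)


print(list(hanoi_moves(2)))
print(sum(1 for _ in hanoi_moves(10)))  # number of moves for 10 disks
```

Three lines of logic, but executing them for N disks means emitting 2^N - 1 moves in exactly the right order. Knowing the recipe and carrying it out without a slip are different jobs.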

My Take: Separating the Signal from the Noise

Now, the real question: as builders, what should we actually take away from this?

What the paper is right about

Let’s start here: Apple is right about one thing. The way we evaluate models today needs serious work. Their push to go beyond contaminated benchmarks is exactly the kind of shift we need. Too many benchmarks reflect what a model might’ve already memorised from pretraining, not what it can genuinely reason through. Creating controlled testbeds like the ones in this paper is a step in the right direction, and a much-needed one.

But the idea that this somehow shatters the illusion of intelligence in today’s models? That’s where the paper starts to overreach.

Where it begins to crack

If you’ve ever actually built with these systems, you’ve seen this behaviour before. LLMs struggle with tasks they weren’t explicitly trained for. That’s not shocking, it’s expected.

Because let’s be real: these models are neural networks. What they’re really good at is pattern recognition. Even Reinforcement Learning, for all its flashiness, is still a form of statistical pattern shaping; it can produce impressive emergent behaviour, yes, but it’s not magic.

If a task or response format is new, unreinforced, or structurally unfamiliar, the model is likely to fail. Not because it isn’t “thinking” or “reasoning”, but because it wasn’t trained or incentivised to reason this way.

And here’s the important part: Apple’s setup didn’t just test abstract reasoning; it tested whether a model could reason and then output in a specific, probably non-incentivised format. That’s a big ask for any token predictor.

There’s also a human parallel worth noting. When people are presented with a complex, novel logic puzzle, they often fail, too, at least at first.

The key difference? We’re adaptive learners who can pick things up on the fly (and, mostly, after the fact). We can Google it, watch someone solve it, or piece together a strategy from someone else’s prior experience. Models can’t. They’re frozen snapshots of past learning, not adaptive learners (and that’s the next frontier, to be honest).

And at the end of the day, these models are next-token predictors. That’s not just a technicality; it defines how they operate. They don’t “think” in plans or structured solutions. They think in tokens, one at a time, each choice guided by probabilities learned from their training data.

So when you ask a model to generate a single, perfect, long sequence of moves, you're setting up a statistical minefield.

This isn’t like solving a math problem where everything funnels toward a single, crisp answer. These puzzles require the model to explore a huge space of possibilities and commit to one flawless path, without deviation, all in one go.

But here’s the catch: every token generation is a probabilistic step. And because those probabilities are shaped by the entire soup of its pretraining data, even a slight nudge in the wrong direction, a faint echo of a similar pattern it once saw, can knock it off course. One small misstep, and the whole solution unravels.

And expecting a stochastic model to nail that path on the first try misunderstands what it was trained to do.
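A toy calculation shows how quickly this compounds. Assume, purely for illustration, that the model gets each move right with the same probability and that errors are independent. Neither assumption holds for real models, and the 0.999 figure is made up, but the shape of the curve is the point.

```python
def flawless_run_probability(per_move_accuracy: float, num_disks: int) -> float:
    """Chance of emitting every Tower of Hanoi move correctly under the toy model."""
    num_moves = 2 ** num_disks - 1  # minimum number of moves for num_disks disks
    return per_move_accuracy ** num_moves


# Hypothetical per-move accuracy of 99.9%.
for n in (3, 5, 7, 8, 10):
    print(n, 2 ** n - 1, round(flawless_run_probability(0.999, n), 3))
```

Under these assumptions, a model that is 99.9% reliable per move finishes a 7-disk puzzle cleanly about 88% of the time, and a 10-disk puzzle only about 36% of the time. The per-move skill didn't change; the sequence just got longer.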

So no, this doesn’t “debunk” the intelligence of LLMs. But the paper does surface two extremely important signals, especially if you're building agents or structured systems.

1. The failure to execute is a big deal.

This is the part we should be talking about more. When a model is handed a perfectly valid algorithm and still fails to follow it, this isn’t a reasoning failure, it’s a control failure. It shows us that even when the strategy is in place, the model can’t consistently follow through. That has serious implications for any builder trying to create agents that follow structured plans or step-by-step workflows. (And that’s exactly why we need to engineer the systems around the models.)

2. The “giving up early” behaviour is a mystery worth solving.

Why would a model stop reasoning halfway through a hard problem, even when it has tokens left? Is it a side effect of how we’ve trained them to prioritise brevity and confidence? A learned behaviour from RLHF that says “stop when you’re unsure”? Or is it something more nuanced?

Whatever the reason, it’s not just a random bug. It’s a consistent failure mode, and that’s worth investigating to push model capabilities forward.

Bottom line: while the headlines might overstate it, the paper gives us something to think about. But it doesn’t reveal an illusion, only some interesting blind spots.

Part 2: The Rebuttal — “The Illusion of the Illusion of Thinking”

The Peer Review, Served Cold (and Written by an LLM)

As the media went wild with “LLMs can’t think” headlines, the AI community braced for what usually follows a bold claim: a rebuttal. This one came swiftly, and with a title that might’ve earned a standing ovation in a research roast: "The Illusion of the Illusion of Thinking."

But they didn’t stop at the title. The paper’s authors? “C. Opus” and “A. Lawsen.” That’s not a coincidence. That’s Claude Opus, Anthropic’s own model, credited as the lead author. Which, let’s be honest, is a flex. Claude itself was claiming authorship, as if to say, “Not only can I reason, I’ll write the damn rebuttal.”

Deconstructing the “Collapse”: Anthropic’s Core Arguments

But underneath the flair, Anthropic presented a serious and methodical critique. Their key point? The collapse Apple observed wasn’t a failure of cognition; it was a function of how the experiments were designed.

They offer four central counterarguments:

1. The “Collapse” Was Caused by Token Limits, Not Necessarily Reasoning Gaps

Apple’s puzzles, Tower of Hanoi for instance, required models to output an exhaustive list of moves for N-disk problems, spelling out every step in text. The number of moves grows exponentially with N (2^N - 1), so the number of tokens needed to write them all out grows at least as fast, and verbosity only makes it worse.

Anthropic shows that the models’ accuracy drops off precisely at the point where this format exceeds the model’s max output token limit, usually around N=7 or N=8 (the same range where Apple’s paper reports the collapse). Critically, models recognise this constraint in their own outputs, explicitly writing things like:

“The pattern continues, but to avoid making this too long, I’ll stop here.”

Which implies this isn’t a failure of reasoning; it’s budget-aware truncation.
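You can sanity-check the budget argument with a back-of-the-envelope calculator. Everything numeric here is a placeholder assumption of mine, not a figure from either paper: the 64,000-token limit, and especially the tokens spent per move, which depends on how much the model "thinks out loud" before writing each step.

```python
def hanoi_move_count(num_disks: int) -> int:
    """Minimum number of moves for a Tower of Hanoi with num_disks disks."""
    return 2 ** num_disks - 1


def estimated_tokens(num_disks: int, tokens_per_move: int) -> int:
    """Rough token cost if every move costs about tokens_per_move tokens."""
    return hanoi_move_count(num_disks) * tokens_per_move


def largest_n_within_budget(token_limit: int, tokens_per_move: int) -> int:
    """Largest N whose full move list still fits inside token_limit."""
    n = 0
    while estimated_tokens(n + 1, tokens_per_move) <= token_limit:
        n += 1
    return n


# Terse output only (assumed ~10 tokens per move).
print(largest_n_within_budget(64_000, tokens_per_move=10))
# Verbose reasoning plus output (assumed ~250 tokens per move).
print(largest_n_within_budget(64_000, tokens_per_move=250))
```

The answer swings from N=12 to N=8 depending on that one verbosity assumption, which is exactly why the output format, and not just the puzzle, decides where the cliff sits.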

2. The Evaluation Included Mathematically Impossible Puzzles

This was the methodological red flag.

In the River Crossing domain, Apple included scenarios where models were tasked with solving unsolvable constraint problems. For instance, trying to get 6+ agents across a river with a boat that only holds 3, without violating constraints like leaving incompatible agents alone, is mathematically impossible.

Anthropic correctly points out that treating these instances as solvable and then penalising models for not solving them is invalid. It’s the equivalent of handing a SAT solver an unsatisfiable formula and marking it wrong for returning “unsatisfiable.” In these cases, failure was the correct answer.
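Checking solvability before you benchmark is cheap. Here is a sketch of a brute-force breadth-first search over River Crossing states. I am assuming the classic "jealous husbands" rule (an actor can't be around another agent unless their own agent is present), enforced on both banks and in the boat; Apple's exact rule wording may differ, and every name below is hypothetical.

```python
from collections import deque
from itertools import combinations
from typing import Iterable, Optional, Tuple

Person = Tuple[str, int]  # ("actor", i) or ("agent", i)


def is_safe(group: Iterable[Person]) -> bool:
    """Safe if no agents are present, or every actor present has their own agent present."""
    members = list(group)
    agents = {i for kind, i in members if kind == "agent"}
    if not agents:
        return True
    return all(i in agents for kind, i in members if kind == "actor")


def min_crossings(num_pairs: int, boat_capacity: int) -> Optional[int]:
    """Fewest one-way trips to move everyone across, or None if no solution exists."""
    everyone = frozenset((kind, i) for kind in ("actor", "agent") for i in range(num_pairs))
    start = (everyone, True)  # (people on the left bank, boat is on the left)
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        (left, boat_left), trips = queue.popleft()
        if not left:
            return trips  # left bank is empty: everyone has crossed
        bank = left if boat_left else everyone - left
        for size in range(1, boat_capacity + 1):
            for riders in combinations(sorted(bank), size):
                if not is_safe(riders):
                    continue  # the boat itself must be a safe group
                moved = frozenset(riders)
                new_left = left - moved if boat_left else left | moved
                if not (is_safe(new_left) and is_safe(everyone - new_left)):
                    continue  # both banks must stay safe after the trip
                state = (new_left, not boat_left)
                if state not in seen:
                    seen.add(state)
                    queue.append((state, trips + 1))
    return None  # search exhausted without reaching the goal


print(min_crossings(3, 2))  # classic puzzle: 3 pairs, 2-seat boat
print(min_crossings(6, 3))  # 6 pairs, 3-seat boat
```

Under this encoding the classic 3-pair puzzle needs 11 crossings, while 6 pairs with a 3-seat boat comes back as `None`: the search runs out of states without ever reaching the far bank. A few seconds of compute would have flagged those instances before any model was graded on them.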

3. Performance is Restored with a Better Solution Representation

To isolate the issue, Anthropic tested the same models on the same puzzles, but changed the output format. Instead of requiring full move-by-move lists, they asked the models to output a recursive Lua function that prints the solution when called.

The result? Claude 3.7 Sonnet, Claude Opus 4, OpenAI o3, and Google Gemini 2.5 all completed the Tower of Hanoi puzzle with N = 15, well beyond the collapse point Apple observed, using under 5,000 tokens total.

This shows that the models can reason through the puzzle; the failure mode was the inefficiency of the output format, not necessarily a lack of logical ability.
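To see how lopsided the two representations are, here is a rough sketch that treats a solver as a piece of text, runs it, and compares sizes. The rebuttal asked for Lua; I am using Python here only to keep the examples in this post consistent. `PROGRAM` stands in for what a model might return, and `expand` is a hypothetical helper.

```python
# Stand-in for a model's answer: a short program instead of a full move list.
PROGRAM = '''
def solve(n, source=0, target=2, spare=1):
    if n == 0:
        return []
    return (solve(n - 1, source, spare, target)
            + [(source, target)]
            + solve(n - 1, spare, target, source))
'''


def expand(program_text: str, num_disks: int):
    """Run the program text and return the move list it produces."""
    namespace = {}
    # exec is acceptable for this trusted string; real model output belongs in a sandbox.
    exec(program_text, namespace)
    return namespace["solve"](num_disks)


moves = expand(PROGRAM, 15)
listing = "\n".join(f"move disk from {src} to {dst}" for src, dst in moves)

print("program characters:", len(PROGRAM))
print("moves produced:", len(moves))
print("listing characters:", len(listing))
```

A couple of hundred characters of program expand into 32,767 moves and a listing hundreds of thousands of characters long. Same solution, two very different bills.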

4. The Metric for Complexity Was Misleading

Finally, Anthropic critiques Apple’s use of “compositional depth”, defined as the number of required moves, as a proxy for problem difficulty.

But here’s the issue: More steps ≠ harder.

Tower of Hanoi has an exponential solution length but a known, deterministic recursive pattern. It’s algorithmically trivial once the rule is learned.

River Crossing, on the other hand, involves constraint satisfaction, state tracking, and often multiple valid paths, making it search-intensive and NP-hard in complexity.

So when a model succeeds on a 127-step Hanoi solution but fails on a 5-move River Crossing, that’s not inconsistency, it’s a reflection of two entirely different computational regimes. One is execution-heavy, the other reasoning-heavy.

So the complexity-regime breakdown in Apple’s paper rests on a flawed assumption: that more moves generally make a puzzle harder to solve.

The Rebuttal's Playbook: The New Rules for Testing AI

So, where does the rebuttal leave us? Anthropic doesn't just tear down the original experiment; they conclude with a new set of rules for the road. They cap it off with a line that should be pinned on the wall of every AI lab: "The question isn't whether LRMs can reason, but whether our evaluations can distinguish reasoning from typing."

To that end, they propose a clear playbook for anyone serious about this work:

Stop Confusing Output with Understanding. An evaluation must be able to tell the difference between a model's core reasoning ability and its practical limits, like a finite context window. Don't penalise a model for being unable to write a million-word essay in a 100k-token box.

Your Benchmark Must Be Solvable. This one should be obvious, but here we are. Before you test a model, first verify that the problem you're posing isn't mathematically impossible.

Measure the Right Kind of "Hard." Stop using "solution length" as a lazy proxy for "difficulty." A truly useful metric must reflect the task's actual computational complexity, including the amount of search and planning required.

Test for Algorithms, Not Just Answers. To prove a model understands the how, you have to be flexible with the what. Test for algorithmic understanding by allowing for multiple solution representations, like generating code, not just by checking for one rigid, exhaustive output.

The Builder's Playbook: 4 Pillars for Working with "Reasoning" Models

So, after all the back-and-forth, what are the real, durable lessons for those of us in the trenches? It's not about picking a winner in the Apple vs. Anthropic debate (although my inclination is pretty clear by now). It's about upgrading our own mental models for how we build with these systems.

Here are the four pillars I believe matter most.

1. Stop Asking "Can It Think?" — Start Asking "Is It Reliable?"

The entire debate over whether a model is "thinking" is a philosophical distraction for an engineer. For a builder, the only question that matters is whether a model's behaviour is predictable, controllable, and reliable enough for a production system. The Apple paper, despite its methodological flaws, correctly identified that this reliability collapses under complexity. That collapse is a tangible engineering problem, whereas "thinking" is an academic one. Focus on what you can measure and control: reliability.

2. Treat "Thinking" as a Debuggable Interface, Not a Mind.

The Chain-of-Thought or "thinking" output from an LRM is not a window into a synthetic consciousness. It's a structured, debuggable API response. The Apple paper's most valuable contribution was using simulators to validate these traces step-by-step. The takeaway for us is to treat these thought processes as a powerful tool for observing failure modes. It's the most detailed error log you'll ever get. Use it to build external validation logic and to understand exactly where your system breaks.

3. Your Job Is to Find the Right Problem Representation.

The most important tactical lesson from the entire debate was Anthropic's "Representation Fix", asking for a function instead of a list. This should be elevated to a core engineering principle. Often, an engineer's most critical job when working with LLMs is not to build a better prompt, but to reframe the problem into a representation that the model can handle reliably and compactly. The model failing to write a 100,000-token answer is a limitation; the model succeeding at writing a 5,000-token function that generates that answer is a solution.

4. Build Your Own Verification Layer. Always.

If this debate taught us anything, it's that you can't blindly trust the model's output, and you can't blindly trust the public benchmarks used to evaluate it. The Apple paper used simulators to find failures. The Anthropic paper found that the benchmark itself was a failure. The unified lesson for builders is to trust neither. You must assume failure and build your own, domain-specific verification layers, just like the puzzle simulators, to check the model's output for correctness, safety, and format before it ever reaches a user or another production system.
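What that layer looks like depends on your domain, but the skeleton is usually the same: generate, check, retry, and fail loudly. Here is one minimal sketch of that pattern. The `generate` callable stands in for whatever calls your model, and each validator is a function you write that returns an error message or `None`; all names are hypothetical.

```python
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")
Validator = Callable[[T], Optional[str]]  # returns an error message, or None if the check passes


def generate_verified(generate: Callable[[], T], validators: List[Validator], max_attempts: int = 3) -> T:
    """Call generate() until a candidate passes every validator, or raise after max_attempts."""
    failures = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate()
        problems = []
        for check in validators:
            message = check(candidate)
            if message is not None:
                problems.append(message)
        if not problems:
            return candidate  # passed every check
        failures.append(f"attempt {attempt}: " + "; ".join(problems))
    # Never pass unverified output downstream; surface the failure history instead.
    raise ValueError("no candidate passed verification: " + " | ".join(failures))


# Usage with a fake "model" that fails once, then succeeds.
fake_outputs = iter(["", "move disk 1 from A to C"])
result = generate_verified(
    generate=lambda: next(fake_outputs),
    validators=[lambda text: None if text.strip() else "empty output"],
)
print(result)
```

Swap the toy validator for a puzzle simulator, a schema check, or a policy filter, and the shape stays the same: nothing reaches a user until something other than the model has signed off on it.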

A final word from Nocto, who's had too much coffee to care about headlines:

The world will argue about consciousness. You should be arguing about your test coverage. Only one of those ships a product.

Stay Dangerous. Hoot

References and Further Reading

Apple. The Illusion of Thinking

C. Opus and A. Lawsen. The Illusion of the Illusion of Thinking